Topics of Interest

Differentiable games / Minmax optimization / 2-player games

Internal Presentations & Invited Talks*

- Intro I: General problem & colab playlab. Tatjana Chavdarova and Matteo Pagliardini, EPFL. 05.10.2020. slides, colab.

- Intro II: How minmax differs from minimization? Thomas Pethick, EPFL. (paper covered: The Mechanics of n-Player Differentiable Games) 12.10.2020. slides. Paper details ↴

- The limits of min-max optimization algorithms: convergence to spurious non-critical points. 19.10.2020 & 26.10.2020. Ya-Ping Hsieh, ETH. paper, slides. Paper abstract ↴

- A Unified Analysis of Extra-gradient and Optimistic Gradient Methods for Saddle Point Problems: Proximal Point Approach. Tatjana Chavdarova, EPFL. 02.11.2020. paper covered, slides. Paper details ↴

- Adversarial Example Games & VI perspective on GANs. Gauthier Gidel, Mila. 09.11.2020. Papers: AEGs & VI perspective, slides. Abstract & Bio ↴

- A Closer Look at the Optimization Landscapes of GANs. Hugo Berard, Mila. 16.11.2020. paper, slides. Abstract & Bio ↴

- LEAD: Least-Action Dynamics for Min-Max Optimization. Reyhane Hemmat, Mila. 23.11.2020. paper, slides. Abstract & Bio ↴

- Stochastic Hamiltonian Gradient Methods for Smooth Games. Nicolas Loizou, Mila. 30.11.2020. paper, slides. Abstract & Bio ↴

- The Complexity of Constrained Min-Max Optimization. Manolis Zampetakis, UC Berkeley. 14.12.2020. paper, slides. Abstract & Bio ↴

- Lipschitz Generative Adversarial Nets. Igor Krawczuk, EPFL. 21.12.2020. paper covered, slides. Paper details ↴

- Implicit Learning Dynamics in Stackelberg Games. Tanner Fiez, University of Washington. 15.03.2021. paper, slides. Abstract & Bio ↴

- Min-max Problem in Adversarial Training & Understanding and Improving Fast Adversarial Training. Maksym Andriushchenko, EPFL. 22.03.2021. paper, slides. Abstract & Bio ↴

The Mechanics of n-Player Differentiable Games. David Balduzzi, Sebastien Racaniere, James Martens, Jakob Foerster, Karl Tuyls, and Thore Graepel. ICML, 2018.

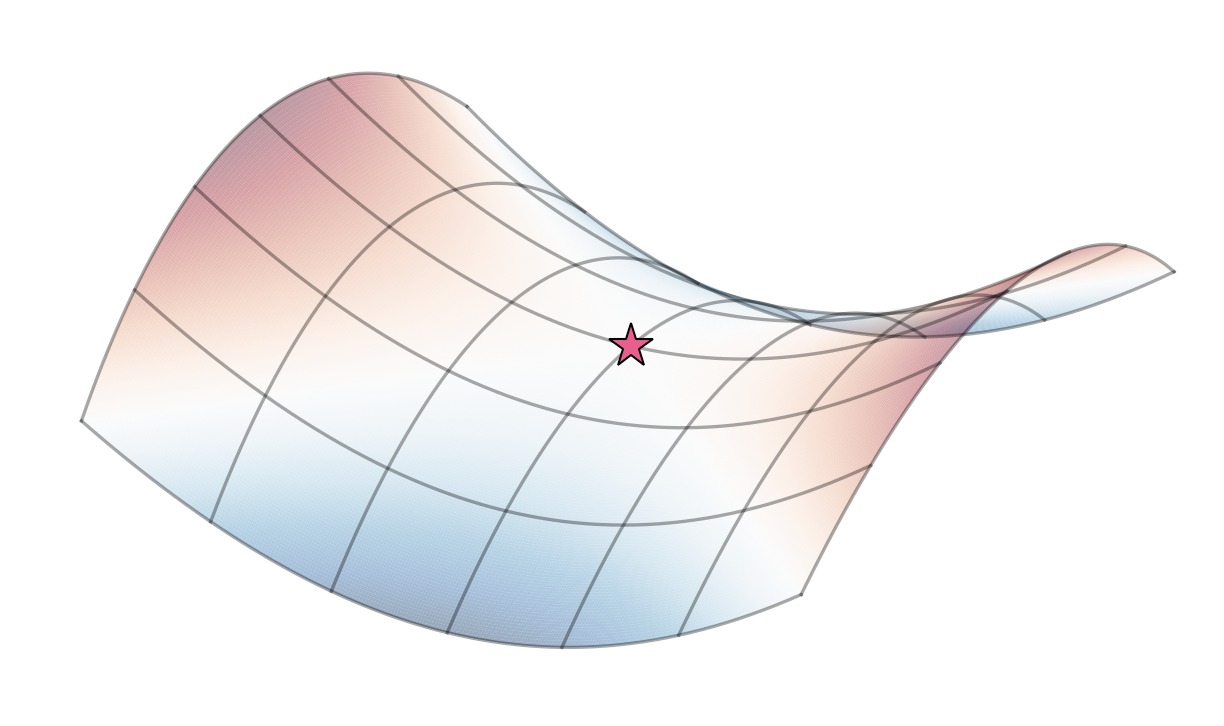

Abstract. The cornerstone underpinning deep learning is the guarantee that gradient descent on an objective converges to local minima. Unfortunately, this guarantee fails in settings, such as generative adversarial nets, where there are multiple interacting losses. The behavior of gradient-based methods in games is not well understood―and is becoming increasingly important as adversarial and multiobjective architectures proliferate. In this paper, we develop new techniques to understand and control the dynamics in general games. The key result is to decompose the second-order dynamics into two components. The first is related to potential games, which reduce to gradient descent on an implicit function; the second relates to Hamiltonian games, a new class of games that obey a conservation law, akin to conservation laws in classical mechanical systems. The decomposition motivates Symplectic Gradient Adjustment (SGA), a new algorithm for finding stable fixed points in general games. Basic experiments show SGA is competitive with recently proposed algorithms for finding stable fixed points in GANs―whilst at the same time being applicable to―and having guarantees in―much more general games.

The limits of min-max optimization algorithms: convergence to spurious non-critical points. Ya-Ping Hsieh, Panayotis Mertikopoulos, Volkan Cevher. ArXiv, 2020.

Abstract. Compared to minimization problems, the min-max landscape in machine learning applications is considerably more convoluted because of the existence of cycles and similar phenomena. Such oscillatory behaviors are well-understood in the convex-concave regime, and many algorithms are known to overcome them. In this paper, we go beyond the convex-concave setting and we characterize the convergence properties of a wide class of zeroth-, first-, and (scalable) second-order methods in non-convex/non-concave problems. In particular, we show that these state-of-the-art min-max optimization algorithms may converge with arbitrarily high probability to attractors that are in no way min-max optimal or even stationary. Spurious convergence phenomena of this type can arise even in two-dimensional problems, a fact which corroborates the empirical evidence surrounding the formidable difficulty of training GANs.

A Unified Analysis of Extra-gradient and Optimistic Gradient Methods for Saddle Point Problems: Proximal Point Approach. Aryan Mokhtari, Asuman Ozdaglar, Sarath Pattathil. ArXiv, 2019.

Abstract. In this paper we consider solving saddle point problems using two variants of Gradient Descent-Ascent algorithms, Extra-gradient (EG) and Optimistic Gradient Descent Ascent (OGDA) methods. We show that both of these algorithms admit a unified analysis as approximations of the classical proximal point method for solving saddle point problems. This viewpoint enables us to develop a new framework for analyzing EG and OGDA for bilinear and strongly convex-strongly concave settings. Moreover, we use the proximal point approximation interpretation to generalize the results for OGDA for a wide range of parameters.

Abstract. Game-theoretic formulation of machine learning tasks has now great success in the past couple of years. In this talk, I will first introduce Adversarial Example Games (AEG), a framework that models the crafting of adversarial examples as a min-max game between a generator of attacks and a classifier. Then I will present a Variational Inequality perspective on the optimization of GAN and AEG.

Bio. Gauthier Gidel is an assistant professor at Université de Montréal (UdeM) at DIRO and a core faculty member of Mila. He is a recipient of the 2019 Borealis graduate fellowship program. He is broadly interested in the theoretical understanding of learning (such as generalization in deep learning) and game formulations of ML tasks such as GANs and adversarial examples.

Abstract. Theory on smooth games optimization often relies on assumptions that do not hold when the players are parametrized as deep neural networks and have non-convex loss functions. In particular, theoretical works highlight several limitations of “standard” methods (i.e. GDA) for solving games, however those methods are still widely used in practice with great success (e.g. GANs). This talk will try to investigate this gap between theory and practice in the context of GANs, by attempting to answer the following questions: “Do GANs actually suffer from rotational dynamics? And do they converge to Local Nash Equilibrium?”. To do so Hugo will introduce several visualization tools that can be useful to diagnose optimization problems that might arise in games.

Bio. Hugo Berard is a PhD student at Mila and Facebook AI Research under the supervision of Pascal Vincent. His work lies at the intersection of Games and Deep Learning where he tries to understand the optimization challenges that arise in games. Recently, he has been interested in finding connections between games and different fields of machine learning, by formulating machine learning problems as smooth games and deriving new algorithms to solve them.

Abstract. Existing optimization methods for two-player min-max games typically employ intuitive, carefully hand-designed mechanisms for controlling the problematic rotational dynamics commonly encountered during optimization. In this work, we take a novel approach to address this issue by casting min-max optimization as a physical system. We propose LEAD (Least-Action Dynamics), which uses the principle of least-action from physics to discover an efficient optimizer for min-max games. We provide theoretical and empirical analysis that demonstrate the strong performance of our method.

Bio. Reyhane is a PhD student at Mila lab, Université de Montréal. She works under the supervision of Ioannis Mitliagkas and Nicolas Le Roux. Her research interests are in the intersection of machine learning, large scale optimization, and game theory.

Abstract. The success of adversarial formulations in machine learning has brought renewed motivation for smooth games. In this work, we focus on the class of stochastic Hamiltonian methods and provide the first convergence guarantees for certain classes of stochastic smooth games. We propose a novel unbiased estimator for the stochastic Hamiltonian gradient descent (SHGD) and highlight its benefits. Using tools from the optimization literature we show that SHGD converges linearly to the neighborhood of a stationary point. To guarantee convergence to the exact solution, we analyze SHGD with a decreasing step-size and we also present the first stochastic variance reduced Hamiltonian method. Our results provide the first global non-asymptotic last-iterate convergence guarantees for the class of stochastic unconstrained bilinear games and for the more general class of stochastic games that satisfy a “sufficiently bilinear” condition, notably including some non-convex non-concave problems. We supplement our analysis with experiments on stochastic bilinear and sufficiently bilinear games, where our theory is shown to be tight, and on simple adversarial machine learning formulations.

Bio. Nicolas Loizou is an IVADO Post-doctoral Research Fellow at Mila - Quebec Artificial Intelligence Institute and the Department of Computer Science and Operations Research (DIRO), Université de Montréal. Before joining Mila, he was a graduate student at The University of Edinburgh, School of Mathematics where he received his Ph.D. degree in Optimization and Operational Research in 2019. Nicolas's current research focuses on the theory and applications of convex and non-convex optimization in large-scale machine learning and data science problems.

Abstract. In multi-agent learning applications, the role of single-objective optimization is played by equilibrium computation, yet our understanding of its complexity in settings that are relevant for Deep Learning remains sparse. In this talk, we focus on min-max optimization of nonconvex-nonconcave objectives, which has found applications in GANs, and other adversarial learning problems. Here, not only are there no known gradient-descent based methods converging to even local and approximate min-max equilibria, but the computational complexity of identifying them remains poorly understood. We show that finding approximate local min-max equilibria of Lipschitz and smooth objectives requires a number of queries to the function and its gradient that is exponential in the relevant parameters, in sharp contrast to the polynomial number of queries required to find approximate local minima of non-convex objectives. Our oracle lower bound is a byproduct of a complexity-theoretic result showing that finding approximate local min-max equilibria is computationally equivalent to finding Brouwer fixed points, and Nash equilibria in non zero-sum games, and thus PPAD-complete. Minimal complexity theory knowledge will be assumed in the talk.

Bio. Manolis Zampetakis is a postdoc at the Department of Electrical Engineering and Computer Science at the University of California at Berkeley working with Michael Jordan. Before Berkeley, he completed his PhD at the Department of Electrical Engineering and Computer Science at Massachusetts Institute of Technology (MIT) under the supervision of Constantinos Daskalakis. He has completed his undergraduate studies at NTUA. During his graduate studies has been awarded the Google PhD Fellowship.

Lipschitz Generative Adversarial Nets. Zhiming Zhou, Jiadong Liang, Yuxuan Song, Lantao Yu, Hongwei Wang, Weinan Zhang, Yong Yu, Zhihua Zhang. ICML, 2019.

Abstract. In this paper, we study the convergence of generative adversarial networks (GANs) from the perspective of the informativeness of the gradient of the optimal discriminative function. We show that GANs without restriction on the discriminative function space commonly suffer from the problem that the gradient produced by the discriminator is uninformative to guide the generator. By contrast, Wasserstein GAN (WGAN), where the discriminative function is restricted to 1-Lipschitz, does not suffer from such a gradient uninformativeness problem. We further show in the paper that the model with a compact dual form of Wasserstein distance, where the Lipschitz condition is relaxed, may also theoretically suffer from this issue. This implies the importance of Lipschitz condition and motivates us to study the general formulation of GANs with Lipschitz constraint, which leads to a new family of GANs that we call Lipschitz GANs (LGANs). We show that LGANs guarantee the existence and uniqueness of the optimal discriminative function as well as the existence of a unique Nash equilibrium. We prove that LGANs are generally capable of eliminating the gradient uninformativeness problem. According to our empirical analysis, LGANs are more stable and generate consistently higher quality samples compared with WGAN.

Implicit Learning Dynamics in Stackelberg Games: Equilibria Characterization, Convergence Analysis, and Empirical Study. Tanner Fiez, Benjamin Chasnov, Lillian Ratliff. ICML, 2020.

Abstract. Continuous games traditionally arise in domains including economics and mechanism design and now they commonly emerge from machine learning problems. Often, the underlying game in such formulations exhibits an order of play between the players either explicitly or implicitly. Motivated by this interaction structure, in a series of recent works, we study continuous games from a Stackelberg game perspective. Stackelberg games are a class of games distinguished by the ability of a player deemed the leader to announce a strategy to which a player deemed the follower can then best-respond and the typical equilibrium notion is known as a Stackelberg equilibrium. In this talk, I will begin by presenting a local refinement of the traditional Stackelberg equilibrium notion, a characterization of this solution concept in terms of gradient-based sufficient conditions, and the connections to the analogous local Nash equilibrium concept and its gradient-based sufficient conditions. Following this, I will present gradient-based learning dynamics which mirror the underlying structure of a Stackelberg game using the implicit function theorem and present the local stability and convergence guarantees we obtain for this algorithm. Building off of this, I will present our work studying the connections between simultaneous gradient descent and the equilibrium concept we focus on. In particular, we characterize the impact that timescale separation between the players has on the local stability and convergence guarantees around local Stackelberg equilibria. Our results on this topic also reveal important insights into more structured classes of games where global convergence guarantees are obtainable.

Bio. Tanner Fiez is a 5th year Ph.D. student in the Department of Electrical and Computer Engineering at the University of Washington advised by Professor Lillian Ratliff. He is a recipient of a National Defense Science and Engineering Graduate Fellowship. Prior to coming to the University of Washington, Tanner obtained his Bachelor’s of Science in Electrical and Computer Engineering from Oregon State University. Tanner’s primary research interests are in learning dynamics in games and online decision-making problems. In the area of game theory, he has worked on analyzing gradient-based learning dynamics in continuous games and online learning dynamics in finite strategy games. In the area of online decision-making, he has done research on multi-armed bandit algorithms in a variety of settings and also on designing sequential decision-making policies to balance multiple objectives in market-based applications.

Min-max Problem in Adversarial Training & Understanding and Improving Fast Adversarial Training. Maksym Andriushchenko, Nicolas Flammarion. NeurIPS, 2020.

Abstract. A recent line of work focused on making adversarial training computationally efficient for deep learning models. In particular, Wong et al. (2020) showed that l∞ adversarial training with fast gradient sign method (FGSM) can fail due to a phenomenon called "catastrophic overfitting", when the model quickly loses its robustness over a single epoch of training. We show that adding a random step to FGSM, as proposed in Wong et al. (2020), does not prevent catastrophic overfitting, and that randomness is not important per se -- its main role being simply to reduce the magnitude of the perturbation. Moreover, we show that catastrophic overfitting is not inherent to deep and overparametrized networks, but can occur in a single-layer convolutional network with a few filters. In an extreme case, even a single filter can make the network highly non-linear locally, which is the main reason why FGSM training fails. Based on this observation, we propose a new regularization method, GradAlign, that prevents catastrophic overfitting by explicitly maximizing the gradient alignment inside the perturbation set and improves the quality of the FGSM solution. As a result, GradAlign allows to successfully apply FGSM training also for larger l∞-perturbations and reduce the gap to multi-step adversarial training.

Bio. Maksym Andriushchenko is a second-year PhD student in computer science at EPFL in Switzerland. He obtained his MSc from Saarland University, Germany. His research mainly focuses on how to make machine learning algorithms adversarially robust and improve their reliability. Maksym has published seven papers at major machine learning and computer vision conferences (NeurIPS, ICLR, AISTATS, CVPR, and ECCV).

Recordings are available for internal EPFL members only and for a limited time (use above email to request).

* Paper covered denotes that a member of our reading group presented a paper which (s)he did not (co)author. Where omitted a (co)author of the listed paper is the presenter.